Explore our IP Address Database Downloads for instant access to our IP address insights

Learn moreHow to choose the best file format for your IPinfo database?

This article is a followup to our “Data downloads vs API, or both?”. Please check out that article if you are looking to decide between API or database products.

When it comes to using our database products, customers are prompted with three database formats:

- CSV

- JSON

- MMDB

Through these 3 main dataset file formats, our products such as IP to Geolocation, IP to Company, Privacy Detection, and other databases are delivered to our customers. But which one should you use?

With our API products data ingestion is fairly straightforward, but with our downloadable databases there are more interesting features and options that should be considered carefully to match your specific data needs.

This article covers the features and specifics of individual file formats of our downloadable databases. If you’d like to know more about our marketplace integrations such as Snowflake integrations, stay tuned!

Summary of the file formats

The summary of the different features of the 3 different file formats is the following:

| MMDB | CSV | JSON (NDJSON) | |

|---|---|---|---|

| File structure | Binary | Plaintext | Plaintext |

| Data structure | Flat | Flat | Flat |

| Usage | IP address lookups | Ingestion to a database or data warehouse | Ingestion to a database or a data warehouse |

| Query speed | Fastest | Depends on the DBMS | Depends on the DBMS |

| Data operations | Can only be used for IP address queries | Easy to modify, manage and explore | Easy to modify, manage and explore |

| Industry usecase | Niche. Only limited to IP geolocation usage. | Universally adopted | Somewhat adopted |

This is a very high-level summary of the different file formats, so we recommend you keep reading to discover the most suitable option for your use case.

CSV Format

Comma Separated Value (CSV) is a plain text file that uses commas to separate (or delimit) each field of value of a record with a comma character. Each record itself is represented as a new line or technically delimited with a \\n character. CSV file usually is used to store data in a tabular format, meaning that each record represented as a new line will have an equal number of fields or columns.

Even though CSV is defined by comma-separated value, various other characters such as tab \\t, space, tilda ~, semi-colon (;) etc. are used to separate individual fields or columns.

It is considered to be the de-facto plain text file standard for data exchanges. The RFC 4180 is a technical standard that formalizes the CSV format.

Advantages of CSV Format

CSV is considered the default data exchange file format. It is easy to parse when prepared correctly, and it even compresses well with a compression program like gzip. The CSV file format is universally accepted for everything from data pipelines to IT tools. It is extremely easy to use when it comes to ingesting in DBMS (Database Management Systems) such as PostgreSQL, MySQL, SQL Server, etc. Whether it be a GUI prompt or an SQL query CSV ingestion, importing to a database is a fairly common operation.

CSV being standardized means that you can easily connect our database downloads to your cloud platform and create a data pipeline through IPinfo Database Downloads ⇒ Cloud Storage (AWS S3, GCP GCS, ...) ⇒ Cloud Database or Data warehouse (GCP BigQuery, AWS Redshift, ...). For instance, you can ingest our database to Snowflake. But the best option, in that case, would be to use our Snowflake Integration.

Disadvantages of the CSV format

CSV is considered to be one of the best data exchange formats due to its simplicity when it comes to parsing, but that is essentially a double-edged sword. Many CSV processing engines or native solutions make CSV file parsing hard because of the comma-separated value declaration.

With CSV files, bugs emerge due to not having a unified agreement of how data is represented and how to treat control characters such as newline, comma, escape characters etc. Parsing and processing control characters and encoding issues can present difficulties. According to RFC 4180 (the standard for CSV files):

While there are various specifications and implementations for the CSV format , there is no formal specification in existence, which allows for a wide variety of interpretations of CSV files.

Essentially, for parsing CSV files there needs to be prior communication and understanding, which is often not done. This lack of agreement leads to confusion when processing CSV datasets.

Comma-separated values are difficult to parse as many cells of data can contain commas as a part of their value, but CSV parsing considers commas to be a special character. So this can result in creating a non-uniform number of columns.

When we deliver our databases, we take careful measures to declare our column values appropriately. If a text in a column contains a comma character, double quotes, line breaks (CRLF) which are all special characters in CSV that column needs to be wrapped in double quotation marks " . By enclosing the text in double quotation we declare that, it is a distinct text and shouldn’t be parsed. This is an example of a well-formatted CSV file (our hosted domains database):

ip,total,domains

79.2.55.71,2,"designabaco.it,designabaco.com"

47.57.71.53,2,"youcenter.info,2020user.com"

35.181.34.83,1,epicphorm.com

170.130.30.67,1,fzxrd.com

198.211.124.73,1,threadup.ae

34.72.15.84,3,"batesmoving.com,batesmoving.net,batesmoving.co"

Another issue is that even though CSV stands for “Comma” separated values, the delimiter or separator characters can be different in different file formats. CSV files can use semicolons, or tildes as their delimiter or separator characters as well.

In many cases, CSV parsing engines fails to recognize the separator values, and users are expected to know beforehand what delimiter the CSV file is using so that they could explicitly pass the separator values as delimiter parameter. At IPinfo, we stick with commas as a separator since it is the industry standard.

MMDB Data format

Of the three database download formats provide, mmdb (MaxMind DB) format is the only non-plaintext or binary file format. The MaxMind DB file format was created by MaxMind™, an IP Geolocation service provider.

MMDB file format can be considered an industry standard when it comes to mapping IP addresses as both IPv4 and IPv6 addresses to data records. It is a binary file format that utilizes an efficient binary search tree operation for IP lookup. It is not a plaintext file but a binary file, meaning that you can't simply open up the file in a text editor to explore it. You need specialized tools or utilities to make use of this format. But it is a binary optimized for IP address lookups, making it unparalleled in performance compared to the lookups from plaintext files or databases.

MMDB in IPinfo: mmdbctl

We have developed our in-house Open Source utility tool called mmdbctl which allows you to:

- Read data of IP addresses (IPv4 and IPv6) from an MMDB database file

- Import data into plain text files such as CSV, TSV, and JSON from the binary MMDB file

- Export data from flat plaintext files such as CSV, TSV, and JSON to an MMDB file

- Also running additional operations such as:

- Printing metadata from an MMDB file

- Comparing the difference between two MMDB files

- Validating an MMDB file

For more information and instructions, please visit: https://github.com/ipinfo/mmdbctl

Advantages of MMDB Format

At IPinfo we use a modified version of the MMDB database that facilitates faster IP address lookups. Our flat MMDB file structure is tabular in nature. Because our MMDB files do not contain a nested data structure, that means that query performance is relatively faster. Plus, the tabular structure reduces complexities in data ingestion and storage.

IP address lookups on an MMDB file are blazingly fast compared to even database lookups. Our plaintext files aren't meant to be directly used in lookups, but they are supposed to be ingested in a database management system (DBMS) like PostgreSQL, MySQL, Snowflake, Bigquery, Clickhouse, etc.

Compared to MMDB lookups, database lookups are slow because the MMDB file format is optimized for only IP address lookups and it employs the BST (Binary Search Tree) algorithm. A DBMS has a lot to offer in terms of lookups of various columns, data management, viewing, joining, integrating with other services, etc. But MMDB excels in IP lookups because it was designed to do that one thing.

So, if you are looking to make singular queries of IP lookups, the most performant solution is to use the MMDB file format.

Also MMDB IP address lookups can include both IP IPv4 and IPv6 addresses, while database solutions of some DBMS do not have native IP address data type support. In those cases, IP address strings (or VARCHAR to be exact) need to be converted to their integer equivalent.

Disadvantages of the MMDB format

Even though we unanimously agree that MMDB-based IP address lookups outperform nearly every other format when it comes to the usage and management of data, MMDB is not always suitable.

That’s because MMDB is used exclusively for IP address lookups and nothing more. This file format, since it’s not plaintext, cannot be ingested into a DBMS. But the biggest issue with the MMDB file type is that it can not be explored and managed like a database. The result is that database operations and management are harder if not impossible to do with the MMDB file type. Except for IP address lookups you can not execute any other queries on the other columns such as city, region, country, carrier name, etc. Think of MMDB file type as a search engine for only IP address data.

Another aspect is that, for IP address queries on MMDB databases, you need specialized tools, such as:

- mmdbctl from IPinfo

- mmdblookup

- LibMaxMindDB

- runk/node-maxmind

We have created mmdbctl to provide some data management capabilities to the MMDB file format but it is not a replacement for a traditional DBMS solution.

JSON database

What is NDJSON

At first glance, you might be wondering why we offer a JSON database. Traditionally, JSON is used in web requests and is not fit for files that contain several hundred megabytes to gigabytes of data. But in practical usage, you can’t beat JSON when it comes to simplicity and parseability. However, there is a catch: we are not exactly using standard JSON. Instead, it’s a variation of JSON called ndjson.

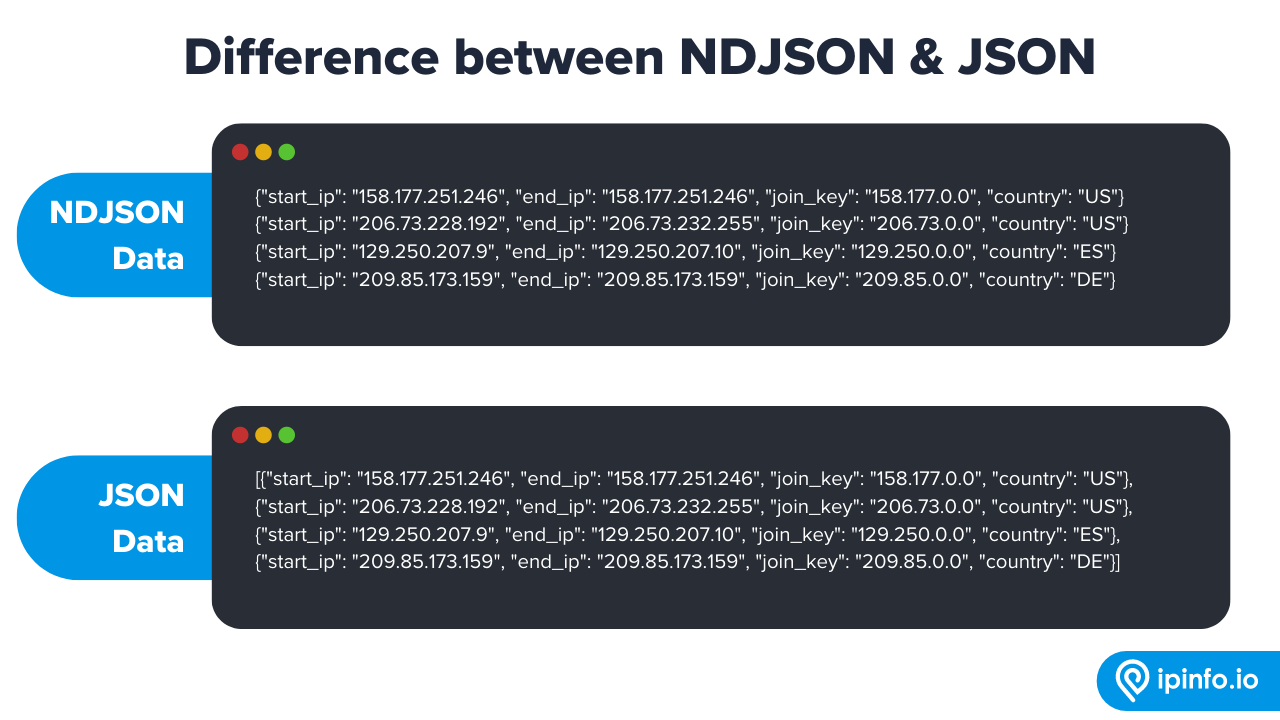

“NDJSON” stands for Newline Delimited JSON. At first glance, NDJSON looks identical to JSON. But if you look carefully, the JSON data is an array object. NDJSON, on the other hand, isn’t an array but rather a series of JSON objects separated (technically known as delimited) by a newline character (\\n).

The advantage of NDJSON over JSON is that the newline delimited structure allows it to be stored or streamed as structured data because each record can be processed one at a time.

It combines the ease of parsing JSON data while enabling effective streaming, storing, logging, conversion, and transmission. The NDJSON libraries can be found here: http://ndjson.org/libraries.html

Advantages of JSON

Many databases, IT tools, and data ingestion engines/solutions struggle with parsing CSV. Even though our CSV is carefully prepared, some solutions struggle with parsing CSV with the default configuration. In many cases, CSV parsing brings up two problems:

- Failure to recognize header columns

- Failure to identify delimiter or separator

NDJSON solves both of these problems effectively since each record contains a uniform structured set of “key” items that are present in all the records. This structure enables parsing solutions to declare database headers easily.

Separator or delimiter identification in CSV files is a classic problem. Usually, CSV files declare every record as separated by a comma (,). The question is what happens when a comma is present in a column value? The comma character can be present in address, location, geolocation (latitude and longitude) etc. columns. In those circumstances, the entire column is typically wrapped in double quotation marks (") to let the parser engine know that this is an individual column. It is not necessary to separate it into columns based on the comma characters within it.

But in some circumstances, some CSV parsers fail to recognize column declarations with double quotes. And by parsing values by the comma character in those strings, the parser ends up generating extra columns. This throws an error as the CSV files are tabular data with a fixed number of columns available across all rows.

To fix that, IPinfo team decided to use NDJSON data format in addition to the CSV data format. If your IT, data, or development solution is struggling with parsing CSV, consider using NDJSON as it allows you to separate each record by newline and parse one JSON object record at a time. Because each record in our NDJSON contains the exact number of key-value pairs (or in the context of CSV data format column) you can reliably parse the JSON object to a flat tabular format.

Disadvantages of JSON

Even with its parsing convenience, NDJSON suffers from a few disadvantages. Even though NDJSON or JSON format is popular in big data processing, but compared to CSV it is not as widely recognized.

Many data ingestion engines are built around the CSV data format. There are sophisticated parsing engines that utilize threading and multiprocessing solution that enables data ingestion to be extremely fast. There are NDJSON parsing engines that can take advantage of multi-processes and threads, but their reach isn't as wide.

Another issue is file size. NDJSON structures each record in key-value pairs. This in tabular format term means that the header row is available in every single record. This data redundancy adds to the size of the complete and uncompressed data.

Here is a comparison of the file size of our compressed JSON data compared to our compressed CSV data:

| Database Product | Compressed File size of JSON over CSV |

|---|---|

| ASN | 13.74% |

| Carrier | 17.02% |

| Company | 11.22% |

| Location | 17.05% |

| Privacy | 17.65% |

| Hosted Domains | 17.65% |

| RWHOIS | 17.91% |

| RIR WHOIS | 19.11% |

Fun fact: For our IP Ranges database, the compressed file size of CSV and JSON is nearly identical. Can you guess why? Feel free to tweet us your answer. Hint: that database contains the least amount of columns (3) of all our products.

With that, we conclude our 3 main database delivery formats, their pros and cons. In some cases, if you want a database, database downloads aren’t your only option. Check out our integrations and partnerships. We have partnered with Snowflake, Maltego, Splunk, Heroku, and many more industry leaders to provide you with the best-in-class IP insights at your convenience.

If you would like to know more or would love some assistance in using our database feel free to reach out to our data experts.

About the author

Abdullah leads the IPinfo internet data community and he also works on expanding IPinfo’s probe network of servers across the globe.